Notes on fastai Book Ch. 1

- A Brief History of Neural Networks

- How to Learn Deep Learning

- What is Machine Learning?

- Create an Image Classifier

- Inspecting Deep Learning Models

- Applying Image Models to Non-Image Tasks

- Other Deep Learning Applications

- Jargon

- References

A Brief History of Neural Networks

The Artificial Neuron

Developed in 1943 by Warren McCulloch, a neurophysiologist, and Walter Pitts, a logician

A simplified model of a real neuron can be represented using simple addition and thresholding

Original Paper: A Logical Calculus of the Ideas Immanent in Nervous Activity

- Neural events and the relations among them can be treated by means of propositional logic due to the “all-or-none” character of nervous activity
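A minimal sketch of the "addition and thresholding" idea in plain Python. The names (`mp_neuron`, `and_gate`, `or_gate`) and the AND/OR examples are illustrative assumptions, the inputs are weighted as a simplification of the original 1943 formulation, and the weights are set by hand: nothing is learned yet.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))  # simple addition
    return 1 if total >= threshold else 0                 # thresholding


def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2
    return mp_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # Either input alone reaches a threshold of 1
    return mp_neuron([a, b], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(f"a={a} b={b} AND={and_gate(a, b)} OR={or_gate(a, b)}")
```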

Mark 1 Perceptron

- The first device based on the principles of artificial neurons

- Designed for image recognition

- Able to recognize simple shapes

- Developed by a psychologist named Frank Rosenblatt

- Gave the artificial neuron the ability to learn

- Invented the perceptron algorithm in 1958

- Wrote The Design of an Intelligent Automaton
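The learning step Rosenblatt added can be sketched with the classic perceptron update rule: after each example, shift every weight by the prediction error times its input. This is a plain-Python illustration under our own assumptions (zero initialization, a learning rate of 1, and logical AND as the training task), not a description of how the Mark 1 hardware was wired.

```python
def predict(weights, bias, x):
    # Threshold unit: output 1 if the weighted sum plus bias is positive
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=10, lr=1.0):
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            # Perceptron rule: move each weight in the direction that reduces the error
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the rule settles on weights that classify all four cases correctly within a few passes.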

Perceptrons

- Written in 1969 by Marvin Minsky, an MIT professor, and Seymour Papert

- Showed that a single layer of Mark 1 Perceptron devices was unable to learn some simple but critical math functions like XOR

- Also showed this limitation could be addressed by using multiple layers

- This solution was not widely recognized by the global academic community
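A small experiment makes the XOR limitation concrete. The sketch below (plain Python, hypothetical helper names) trains a single threshold unit on XOR with the perceptron rule, which cannot get all four cases right because no single line separates XOR's outputs, and then computes XOR exactly with two layers whose weights are picked by hand (an OR unit and a NAND unit feeding an AND unit).

```python
def neuron(weights, bias, x):
    # A single threshold unit
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=100, lr=1.0):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - neuron(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

# Single layer: XOR is not linearly separable, so at most 3 of 4 cases can be correct
w, b = train_perceptron(XOR_DATA)
single_layer_correct = sum(neuron(w, b, x) == t for x, t in XOR_DATA)


def two_layer_xor(x):
    # Hidden layer: OR and NAND of the inputs; output layer: AND of the hidden units
    h_or = neuron([1, 1], -0.5, x)
    h_nand = neuron([-1, -1], 1.5, x)
    return neuron([1, 1], -1.5, (h_or, h_nand))


print(f"single layer: {single_layer_correct} of 4 correct")
print("two layers:", [two_layer_xor(x) for x, _ in XOR_DATA])  # [0, 1, 1, 0]
```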

Parallel Distributed Processing (PDP)

- Written by David Rumelhart, James McClelland, and the PDP Research Group

- Released in 1986 by MIT Press

- Perhaps the most pivotal work in neural networks in the last 50 years

- Posited that traditional computer programs work very differently from brains and that this might be why computer programs were so bad at doing things that brains find easy

- Authors claimed the PDP approach was closer to how the brain worked

- Laid out an approach that is very similar to today’s neural networks

- Defined parallel distributed processing as requiring the following:

- A set of processing units

- A state of activation

- An output function for each unit

- A pattern of connectivity among units

- A propagation rule for propagating patterns of activities through the network of connectivities

- An activation rule for combining the inputs impinging on a unit with the current state of the unit to produce an output for the unit

- A learning rule whereby patterns of connectivity are modified by experience

- An environment within which the system must operate

- Modern neural networks handle each of these requirements

- Parallel distributed processing: Explorations in the microstructure of cognition
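One way to see how a modern network covers the PDP requirements is to label them in a tiny example. The mapping below is our own illustration, not taken from the PDP book: a single sigmoid output unit learns logical OR from an "environment" of four patterns, the learning rule is a simple error-correction (delta-style) update, and the activation rule is simplified to ignore the unit's previous state.

```python
import math

# Environment: the input patterns the system experiences, paired with desired outputs.
# The task is logical OR; the third input is always 1 and acts as a bias unit.
ENVIRONMENT = [((0, 0, 1), 0), ((0, 1, 1), 1), ((1, 0, 1), 1), ((1, 1, 1), 1)]

# A set of processing units: three input units feeding one output unit.
# Pattern of connectivity: one connection strength (weight) per input -> output link.
weights = [0.0, 0.0, 0.0]


def output_function(activation):
    # Output function: the signal a unit sends on, given its activation (identity here)
    return activation


def propagate(outputs, weights):
    # Propagation rule: combine incoming outputs into a net input (weighted sum)
    return sum(o * w for o, w in zip(outputs, weights))


def activation_rule(net_input):
    # Activation rule: turn the net input into the unit's new state of activation
    return 1 / (1 + math.exp(-net_input))


def learning_rule(weights, outputs, target, activation, lr=0.5):
    # Learning rule: adjust each connection in proportion to the error and its input
    error = target - activation
    return [w + lr * error * o for w, o in zip(weights, outputs)]


for epoch in range(2000):
    for pattern, target in ENVIRONMENT:
        inputs = [output_function(a) for a in pattern]  # states of activation of the inputs
        activation = activation_rule(propagate(inputs, weights))
        weights = learning_rule(weights, inputs, target, activation)

print([round(activation_rule(propagate(p, weights))) for p, _ in ENVIRONMENT])  # [0, 1, 1, 1]
```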

1980s-1990s

- Most models were built with a second layer of neurons, avoiding the limitation identified by Minsky and Papert

- Neural networks were used for real, practical projects

- A misunderstanding of the theoretical issues held back the field

- In theory, two-layer models could approximate any mathematical function

- In practice, multiple layers are needed to get good, practical performance

How to Learn Deep Learning

Teaching Approach

- Teach the whole game

- Build a foundation of intuition through application, then build on it with theory

- Show students how individual pieces of theory are combined in a real-world application

- Teach through examples

- Provide a context and a purpose for abstract concepts

- Making Learning Whole by David Perkins

- Describes how teaching any subject at any level can be made more effective if students are introduced to the “whole game,” rather than isolated pieces of a discipline.

- A Mathematician’s Lament by Paul Lockhart

- Imagines a nightmare world where music and art are taught the way math is taught

- Students spend years doing rote memorization and learning dry, disconnected fundamentals that teachers claim will pay off later

Learning Approach

- Learn by doing

- The hardest part of deep learning is artisanal

- How do you know if you have enough data?

- Is the data in the right format?

- Is your model trained properly?

- If your model is not trained properly, what should you do about it?

- Start with small projects related to your hobbies and passions, before tackling a big project

- Be curious about problems you encounter

- Focus on underlying techniques and how to apply them over specific tools or software libraries

- Experiment constantly

What is Machine Learning?

- another way to get computers to complete a desired task

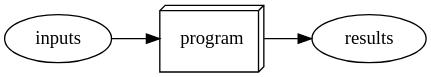

Traditional Programming

- Must explicitly code the exact instructions required to complete a task

- Difficult when you do not know the exact steps

Machine Learning

- Must show the computer examples of the desired task and have it learn how to perform the task through experience

- Difficult when you do not have sufficient data

Pioneered by IBM researcher Arthur Samuel, who began working on it in 1949

- Created a checkers-playing program that beat the Connecticut state champion in 1961

- Wrote “Artificial Intelligence: A Frontier of Automation” in 1962

- Computers are giant morons and need to be told the exact steps required to solve a problem

- Show the computer examples of the problem to solve and let it figure out how to solve it itself

- Arrange some automatic means of testing the effectiveness of any current weight assignment and provide a mechanism so as to maximize the actual performance

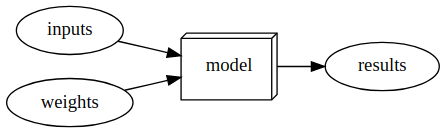

- Introduced multiple concepts

- The idea of “weight assignment”

- Weights are just variables

- A weight assignment is a particular choice of values for those variables

- Weight values define how the program will operate

- The fact that every weight assignment has some “actual performance”

- How accurate is the image classifier?

- How good is the checkers-player program at playing checkers?

- The requirement that there be an “automatic means” of testing that performance

- Compare performance with the current weight values to the desired result

- The need for a “mechanism” for improving the performance by changing the weight assignments

- Update the weight values based on the performance with the current values

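Samuel's loop can be sketched in a few lines. Everything here is a hypothetical illustration: the task (recovering y = 3x + 1 from examples), the use of negative mean squared error as the "actual performance", and the improvement mechanism, which is simple random-search hill climbing rather than what Samuel's program or modern deep learning actually uses.

```python
import random

# Hypothetical task: learn y = 3x + 1 from examples
EXAMPLES = [(x, 3 * x + 1) for x in range(-5, 6)]


def run_model(weights, x):
    # The program's behavior is fully determined by its weight assignment
    return weights[0] * x + weights[1]


def performance(weights):
    # Automatic means of testing: negative mean squared error on the examples (higher is better)
    return -sum((run_model(weights, x) - y) ** 2 for x, y in EXAMPLES) / len(EXAMPLES)


def improve(weights, steps=5000, step_size=0.1, seed=0):
    # Mechanism for improving performance: try a small random change to the
    # weight assignment and keep it only if the performance goes up
    rng = random.Random(seed)
    best = performance(weights)
    for _ in range(steps):
        candidate = [w + rng.uniform(-step_size, step_size) for w in weights]
        score = performance(candidate)
        if score > best:
            weights, best = candidate, score
    return weights


start = [0.0, 0.0]
trained = improve(start)
print(f"performance before: {performance(start):.3f}, after: {performance(trained):.3f}")
print(f"learned weights: {trained[0]:.2f}, {trained[1]:.2f}")
```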

What is a Neural Network?

- A particular kind of machine learning model

- Flexible enough to solve a wide variety of problems just by finding the right weight values

- Universal Approximation Theorem: shows that a neural network with enough hidden units can, in theory, approximate any continuous function to any desired level of accuracy

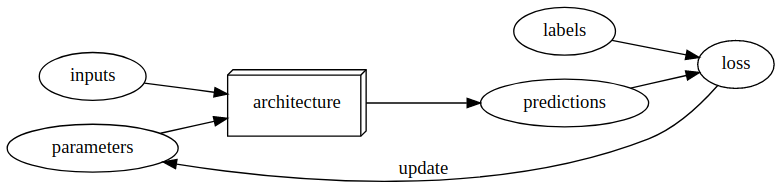

- Stochastic Gradient Descent: a general way to automatically update the weights of a neural network to make it improve at any given task
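Below is a minimal NumPy sketch of stochastic gradient descent training a small neural network (one hidden layer of tanh units) to approximate sin(x). The target function, layer size, initialization, learning rate, and batch size are arbitrary choices for illustration, and the gradients are written out by hand to show what libraries like PyTorch compute automatically.

```python
import numpy as np

rng = np.random.default_rng(0)

# Training data: samples of sin(x) on [-pi, pi]
x = rng.uniform(-np.pi, np.pi, size=(512, 1))
y = np.sin(x)

# One hidden layer of 16 tanh units, followed by a single linear output unit.
# Random biases spread the units' transition points across the input range.
n_hidden = 16
W1 = rng.normal(0.0, 1.5, size=(1, n_hidden))
b1 = rng.uniform(-2.0, 2.0, size=n_hidden)
W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), size=(n_hidden, 1))
b2 = np.zeros(1)


def forward(inputs):
    hidden = np.tanh(inputs @ W1 + b1)
    return hidden, hidden @ W2 + b2


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


lr, batch_size = 0.02, 32
initial_loss = mse(forward(x)[1], y)
for epoch in range(1000):
    order = rng.permutation(len(x))                      # reshuffle every epoch
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]            # one random mini-batch
        xb, yb = x[idx], y[idx]
        hidden, pred = forward(xb)
        # Backpropagation: gradients of the mini-batch mean squared error
        d_pred = 2 * (pred - yb) / len(xb)
        dW2, db2 = hidden.T @ d_pred, d_pred.sum(axis=0)
        d_hidden = (d_pred @ W2.T) * (1 - hidden ** 2)   # tanh'(z) = 1 - tanh(z)^2
        dW1, db1 = xb.T @ d_hidden, d_hidden.sum(axis=0)
        # SGD step: move every weight a small amount against its gradient
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

final_loss = mse(forward(x)[1], y)
print(f"MSE before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```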

Deep learning

- A computer science technique to extract and transform data by using multiple layers of neural networks

- Output from the previous layer serves as input for the next

- Each layer progressively refines its input

- A neural network learns to perform a specified task through training its layers using algorithms that minimize their errors and improve their accuracy
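The "output of one layer is the input to the next" idea can be sketched in NumPy. The layer widths (784 -> 128 -> 64 -> 10), the ReLU non-linearity, and the random weights are assumptions for illustration; the point is only how each layer transforms the previous layer's output.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical layer widths: 784 input features -> 128 -> 64 -> 10 outputs
sizes = [784, 128, 64, 10]
layers = [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(x):
    shapes = []
    for i, (W, b) in enumerate(layers):
        x = x @ W + b                  # this layer transforms the previous layer's output
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)     # ReLU non-linearity between layers
        shapes.append(x.shape)
    return x, shapes


batch = rng.normal(size=(8, 784))      # 8 made-up input examples
out, shapes = forward(batch)
print(shapes)  # [(8, 128), (8, 64), (8, 10)]
```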

Inherent Limitations of Machine Learning

- A model cannot be created without data

- A model can learn to operate on only the patterns seen in the input data used to train it

- This learning approach only creates predictions, not recommended actions

- can result in a significant gap between organizational goals and model capabilities

- Need labels for each example input in the training data

- The way a model interacts with its environment can create feedback loops that amplify existing biases

- A predictive policing algorithm that is trained on data from past arrests will learn to predict arrests rather than to predict crime

- Law enforcement officers using the model might decide to focus policing activity in areas where previous arrests were made, resulting in more arrests in those areas

- The data from these new arrests are then fed into the model, increasing its bias towards those areas
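The feedback loop can be illustrated with a toy simulation. All numbers are hypothetical: two areas with the same true crime rate, a small initial imbalance in recorded arrests, and a rule that sends 80% of patrols to whichever area has more recorded arrests. Because arrests are only recorded where officers are sent, the imbalance in the data keeps growing even though the underlying crime rates never differ.

```python
# Two hypothetical areas with the SAME underlying crime rate
true_crime_rate = {"A": 0.10, "B": 0.10}
recorded_arrests = {"A": 55, "B": 45}  # a small initial imbalance in historical data

for year in range(1, 6):
    # "Model": rank areas by past arrests and send most patrols (assumed 80%) to the top one
    top = max(recorded_arrests, key=recorded_arrests.get)
    patrols = {area: (800 if area == top else 200) for area in recorded_arrests}
    # New arrests are only recorded where officers are, so the data follows the patrols
    for area in recorded_arrests:
        recorded_arrests[area] += round(patrols[area] * true_crime_rate[area])
    share_a = recorded_arrests["A"] / sum(recorded_arrests.values())
    print(f"year {year}: share of recorded arrests in area A = {share_a:.2f}")
```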

Create an Image Classifier

Import Dependencies

```python
# Import fastai computer vision library
# Includes functions and classes to create a wide variety of computer vision models
from fastai.vision.all import *
```

Load Training Data

```python
# Download and extract the training dataset
# base directory: '~/.fastai'
# returns a Path object: https://docs.python.org/3/library/pathlib.html
path = untar_data(URLs.PETS)/'images'

print(type(path))
print(path)
```

```text
<class 'pathlib.PosixPath'>
/home/innom-dt/.fastai/data/oxford-iiit-pet/images
```

Oxford-IIIT Pet Dataset: contains 7,390 images of cats and dogs from 37 breeds

```python
URLs.PETS
```

```text
'https://s3.amazonaws.com/fast-ai-imageclas/oxford-iiit-pet.tgz'
```

```python
!ls ~/.fastai/data
```

```text
annotations coco_sample oxford-iiit-pet
```

```python
!ls ~/.fastai/data/oxford-iiit-pet/images | head -5
```

```text
Abyssinian_100.jpg
Abyssinian_100.mat
Abyssinian_101.jpg
Abyssinian_101.mat
Abyssinian_102.jpg
```

```python
# Returns True if the first letter in the string is upper case
def is_cat(x): return x[0].isupper()
```

```python
str.isupper
```

```text
<method 'isupper' of 'str' objects>
```

```python
is_cat("word")  # False
is_cat("Word")  # True
is_cat("woRd")  # False
```

```python
# Cat breeds are upper case
!ls ~/.fastai/data/oxford-iiit-pet/images/[[:upper:]]* | head -5
```

```text
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Abyssinian_100.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Abyssinian_100.mat
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Abyssinian_101.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Abyssinian_101.mat
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Abyssinian_102.jpg
```

```python
# Dog breeds are lower case
!ls ~/.fastai/data/oxford-iiit-pet/images/[[:lower:]]* | head -5
```

```text
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/american_bulldog_100.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/american_bulldog_101.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/american_bulldog_102.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/american_bulldog_103.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/american_bulldog_104.jpg
```

```python
# Create a dataloader to feed image files from the dataset to the model
# Reserves 20% of the available images for the validation set
# Sets the random seed to get consistent results in each training session
# Uses the is_cat() function to label each image True (cat) or False (dog)
# - The labels are sorted, so False (dog) is class 0 and True (cat) is class 1
# Resizes and crops images to 224x224
# Uses 8 CPU workers to load images during training
dls = ImageDataLoaders.from_name_func(
    path, get_image_files(path), valid_pct=0.2, seed=42,
    label_func=is_cat, item_tfms=Resize(224), num_workers=8)
```

```python
print(len(get_image_files(path)))
img_files = get_image_files(path)
for i in range(5):
    print(img_files[i])
```

```text
7390
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Birman_121.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/shiba_inu_131.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Bombay_176.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/Bengal_199.jpg
/home/innom-dt/.fastai/data/oxford-iiit-pet/images/beagle_41.jpg
```

```python
dls.after_item
```

```text
Pipeline: Resize -- {'size': (224, 224), 'method': 'crop', 'pad_mode': 'reflection', 'resamples': (2, 0), 'p': 1.0} -> ToTensor
```

```python
dls.after_batch
```

```text
Pipeline: IntToFloatTensor -- {'div': 255.0, 'div_mask': 1}
```

Train a Model

Randomly Initialized Weights

```python
learn = cnn_learner(dls, resnet34, metrics=accuracy, pretrained=False)
learn.fine_tune(1)
```

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 1.004878 | 0.697246 | 0.662382 | 00:11 |

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.745436 | 0.612512 | 0.688769 | 00:14 |

Pretrained Weights

```python
# Removes the last layer of the pretrained resnet34 and
# replaces it with a new output layer for the target dataset
learn = cnn_learner(dls, resnet34, metrics=accuracy, pretrained=True)
learn.fine_tune(1)
```

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.162668 | 0.023766 | 0.989851 | 00:11 |

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.061914 | 0.014920 | 0.993234 | 00:14 |
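As a sanity check on the size of resnet34, its parameter count can be reproduced by hand. This sketch assumes the torchvision ResNet layout (a 7x7 stem convolution, convolutions without biases, batch norm with a learnable scale and shift, 1x1 projection shortcuts when the channel count changes, and a 1000-class final layer). It only covers the "basic block" ResNets (18 and 34), since ResNet-50 and deeper use bottleneck blocks. The totals agree with the `get_total_params` output for ResNet18 and ResNet34 in these notes.

```python
def conv_params(k, c_in, c_out):
    return k * k * c_in * c_out            # convolution weights (no bias term)


def bn_params(c):
    return 2 * c                           # batch norm: learnable scale and shift per channel


def basic_block_params(c_in, c_out):
    params = conv_params(3, c_in, c_out) + bn_params(c_out)
    params += conv_params(3, c_out, c_out) + bn_params(c_out)
    if c_in != c_out:                      # 1x1 projection shortcut when the width changes
        params += conv_params(1, c_in, c_out) + bn_params(c_out)
    return params


def basic_resnet_params(blocks_per_stage, n_classes=1000):
    total = conv_params(7, 3, 64) + bn_params(64)  # stem
    c_in = 64
    for c_out, n_blocks in zip([64, 128, 256, 512], blocks_per_stage):
        for _ in range(n_blocks):
            total += basic_block_params(c_in, c_out)
            c_in = c_out
    return total + 512 * n_classes + n_classes     # final fully connected layer


print(f"ResNet18: {basic_resnet_params([2, 2, 2, 2]):,}")  # ResNet18: 11,689,512
print(f"ResNet34: {basic_resnet_params([3, 4, 6, 3]):,}")  # ResNet34: 21,797,672
```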

```python
# Build a convolutional neural network-style learner from the dataloader and model architecture
# (newer fastai releases, 2.7 and later, rename cnn_learner to vision_learner)
cnn_learner
```

```text
<function fastai.vision.learner.cnn_learner(dls, arch, normalize=True, n_out=None, pretrained=True, config=None, loss_func=None, opt_func=<function Adam at 0x7f0e87aa2040>, lr=0.001, splitter=None, cbs=None, metrics=None, path=None, model_dir='models', wd=None, wd_bn_bias=False, train_bn=True, moms=(0.95, 0.85, 0.95), cut=None, n_in=3, init=<function kaiming_normal_ at 0x7f0ed3b4f820>, custom_head=None, concat_pool=True, lin_ftrs=None, ps=0.5, first_bn=True, bn_final=False, lin_first=False, y_range=None)>
```

ResNet-34 model from [Deep Residual Learning for Image Recognition](https://arxiv.org/pdf/1512.03385.pdf)

```python
# The pretrained version has already been trained to recognize
# a thousand different categories on 1.3 million images
resnet34
```

```text
<function torchvision.models.resnet.resnet34(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> torchvision.models.resnet.ResNet>
```

```python
# Based on https://github.com/sksq96/pytorch-summary/blob/master/torchsummary/torchsummary.py
from collections import OrderedDict

def get_total_params(model, input_size, batch_size=-1, device='cuda', dtypes=None):
    # Note: batch_size and dtypes are kept from the original signature but are unused
    def register_hook(module):
        def hook(module, input, output):
            class_name = str(module.__class__).split(".")[-1].split("'")[0]
            module_idx = len(summary)
            m_key = f"{class_name}-{module_idx + 1}"
            summary[m_key] = OrderedDict()
            # Count the elements in this module's own weight and bias tensors
            params = 0
            if hasattr(module, "weight") and hasattr(module.weight, "size"):
                params += torch.prod(torch.LongTensor(list(module.weight.size())))
            if hasattr(module, "bias") and hasattr(module.bias, "size"):
                params += torch.prod(torch.LongTensor(list(module.bias.size())))
            summary[m_key]["nb_params"] = params

        # Skip container modules and the top-level model itself
        if (
            not isinstance(module, nn.Sequential)
            and not isinstance(module, nn.ModuleList)
            and not (module == model)
        ):
            hooks.append(module.register_forward_hook(hook))

    if device == "cuda" and torch.cuda.is_available():
        dtype = torch.cuda.FloatTensor
    else:
        dtype = torch.FloatTensor

    # Wrap a single input shape in a list (a list of shapes is also accepted for multi-input models)
    if isinstance(input_size, tuple):
        input_size = [input_size]

    # batch_size of 2 for batchnorm
    x = [torch.rand(2, *in_size).type(dtype) for in_size in input_size]

    # create properties
    summary = OrderedDict()
    hooks = []

    # register hook
    model.apply(register_hook)

    # make a forward pass
    model(*x)

    # remove these hooks
    for h in hooks:
        h.remove()

    total_params = 0
    for layer in summary:
        total_params += summary[layer]["nb_params"]
    return total_params
```

```python
input_shape = (3, 224, 224)
print(f"ResNet18 Total params: {get_total_params(resnet18().cuda(), input_shape):,}")
print(f"ResNet34 Total params: {get_total_params(resnet34().cuda(), input_shape):,}")
print(f"ResNet50 Total params: {get_total_params(resnet50().cuda(), input_shape):,}")
print(f"ResNet101 Total params: {get_total_params(resnet101().cuda(), input_shape):,}")
print(f"ResNet152 Total params: {get_total_params(resnet152().cuda(), input_shape):,}")
```

```text
ResNet18 Total params: 11,689,512
ResNet34 Total params: 21,797,672
ResNet50 Total params: 25,557,032
ResNet101 Total params: 44,549,160
ResNet152 Total params: 60,192,808
```

```python
# 1 - accuracy
error_rate
```

```text
<function fastai.metrics.error_rate(inp, targ, axis=-1)>
```

```python
# 1 - error_rate
accuracy
```

```text
<function fastai.metrics.accuracy(inp, targ, axis=-1)>
```

Use Trained Model

```python
import ipywidgets as widgets

# Upload file(s) from browser to Python kernel as bytes
uploader = widgets.FileUpload()
uploader
```

```text
FileUpload(value={}, description='Upload')
```

```python
# Open an `Image` from path `fn`
# Accepts pathlib.Path, str, torch.Tensor, numpy.ndarray and bytes objects
img = PILImage.create(uploader.data[0])
print(f"Type: {type(img)}")
img
```

```text
Type: <class 'fastai.vision.core.PILImage'>
```

```python
# Prediction on `item`, fully decoded, loss function decoded and probabilities
# (stored in `pred` rather than `is_cat` so the labelling function is not overwritten)
pred, _, probs = learn.predict(img)
print(f"Is this a cat?: {pred}.")
print(f"Probability it's a cat: {probs[1].item():.6f}")
```

```text
Is this a cat?: False.
Probability it's a cat: 0.000024
```

```python
uploader = widgets.FileUpload()
uploader
```

```text
FileUpload(value={}, description='Upload')
```

```python
img = PILImage.create(uploader.data[0])
img
```

```python
pred, _, probs = learn.predict(img)
print(f"Is this a cat?: {pred}.")
print(f"Probability it's a cat: {probs[1].item():.6f}")
```

```text
Is this a cat?: True.
Probability it's a cat: 1.000000
```

Inspecting Deep Learning Models

- It is possible to inspect deep learning models and get rich insights from them

- can still be challenging to fully understand

- Visualizing and Understanding Convolutional Networks

- published by PhD student Matt Zeiler and his supervisor Rob Fergus in 2013

- showed how to visualize the neural network weights learned in each layer of a model

- discovered the early layers in a convolutional neural network recognize edges and simple patterns which are combined in later layers to detect more complex shapes

- very similar to the basic visual machinery in the human eye
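To get a feel for what an early edge-detecting layer does, here is a hand-written filter applied to a tiny synthetic image with NumPy. The Sobel-style kernel is a classic hand-designed edge detector used as a stand-in; a CNN learns its own kernels during training, and the observation above is that its first layers end up detecting edges and simple patterns like this.

```python
import numpy as np


def conv2d(image, kernel):
    """Valid 2D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = kernel.shape
    h, w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# A 6x6 image: dark on the left, bright on the right (a vertical edge)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-made vertical-edge detector (a Sobel-style kernel)
vertical_edge = np.array([[-1, 0, 1],
                          [-2, 0, 2],
                          [-1, 0, 1]], dtype=float)

edges = conv2d(image, vertical_edge)
print(edges)  # each row is [0. 4. 4. 0.]: strong response only where the edge is
```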

Applying Image Models to Non-Image Tasks

- A lot of data can be represented as images

- Sound can be converted to a spectrogram

- spectrogram: a chart that shows the amount of each frequency at each time in an audio file

- Time series can be converted to an image by plotting the time series on a graph

- Various transformations available for time series data

- fast.ai student Ignacio Oguiza created images from time series data using Gramian Angular Difference Field (GADF)

- an image obtained from a time series, representing some temporal correlation between each time point

- fast.ai student Gleb Esman converted mouse movements and clicks to images for fraud detection

- Malware Classification with Deep Convolutional Neural Networks

- the malware binary file is divided into 8-bit sequences which are then converted to equivalent decimal values

- the decimal values are used to generate a grayscale image that represents the malware sample


- It is often a good idea to represent your data in a way that makes it as easy as possible to pull out the most important components

- In a time series, things like seasonality and anomalies are most likely to be of interest

- Rule of thumb: if the human eye can recognize categories from images, then a deep learning model should be able to as well
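The three conversions mentioned above can be sketched with NumPy. These are simplified illustrations, not the exact pipelines from the cited work: the spectrogram uses a Hann-windowed short-time Fourier transform with an arbitrary frame length, the GADF follows the standard definition GADF[i, j] = sin(phi_i - phi_j) after rescaling the series to [-1, 1], and the byte-to-image function uses an arbitrary row width of 16 pixels.

```python
import numpy as np


def spectrogram(signal, frame_len=64, hop=32):
    """Magnitude of a short-time Fourier transform: rows are frequency bins, columns are time frames."""
    window = np.hanning(frame_len)
    frames = np.array([signal[s:s + frame_len] * window
                       for s in range(0, len(signal) - frame_len + 1, hop)])
    return np.abs(np.fft.rfft(frames, axis=1)).T


def gadf(series):
    """Gramian Angular Difference Field: GADF[i, j] = sin(phi_i - phi_j). Assumes a non-constant series."""
    x = np.asarray(series, dtype=float)
    x = 2 * (x - x.min()) / (x.max() - x.min()) - 1    # rescale to [-1, 1]
    phi = np.arccos(np.clip(x, -1, 1))                 # encode each value as an angle
    return np.sin(phi[:, None] - phi[None, :])


def bytes_to_grayscale(data, width=16):
    """Treat each byte (an 8-bit value from 0 to 255) as one grayscale pixel, row by row."""
    pixels = np.frombuffer(data, dtype=np.uint8)
    n_rows = -(-len(pixels) // width)                  # ceiling division
    padded = np.zeros(n_rows * width, dtype=np.uint8)  # zero-pad the last row
    padded[:len(pixels)] = pixels
    return padded.reshape(n_rows, width)


# Usage on made-up inputs
t = np.arange(1024)
spec = spectrogram(np.sin(2 * np.pi * 8 * t / 64))    # a pure tone: 8 cycles per 64-sample frame
field = gadf([1.0, 2.0, 3.0])
img = bytes_to_grayscale(bytes([0, 255] * 20))
print(spec.shape, spec.argmax(axis=0)[:3])  # (33, 31) [8 8 8]
print(field.round(3))
print(img.shape)                             # (3, 16)
```

The pure tone shows up as a single bright row (frequency bin 8) across every time frame, which is exactly the kind of pattern an image model can pick out.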

Other Deep Learning Applications

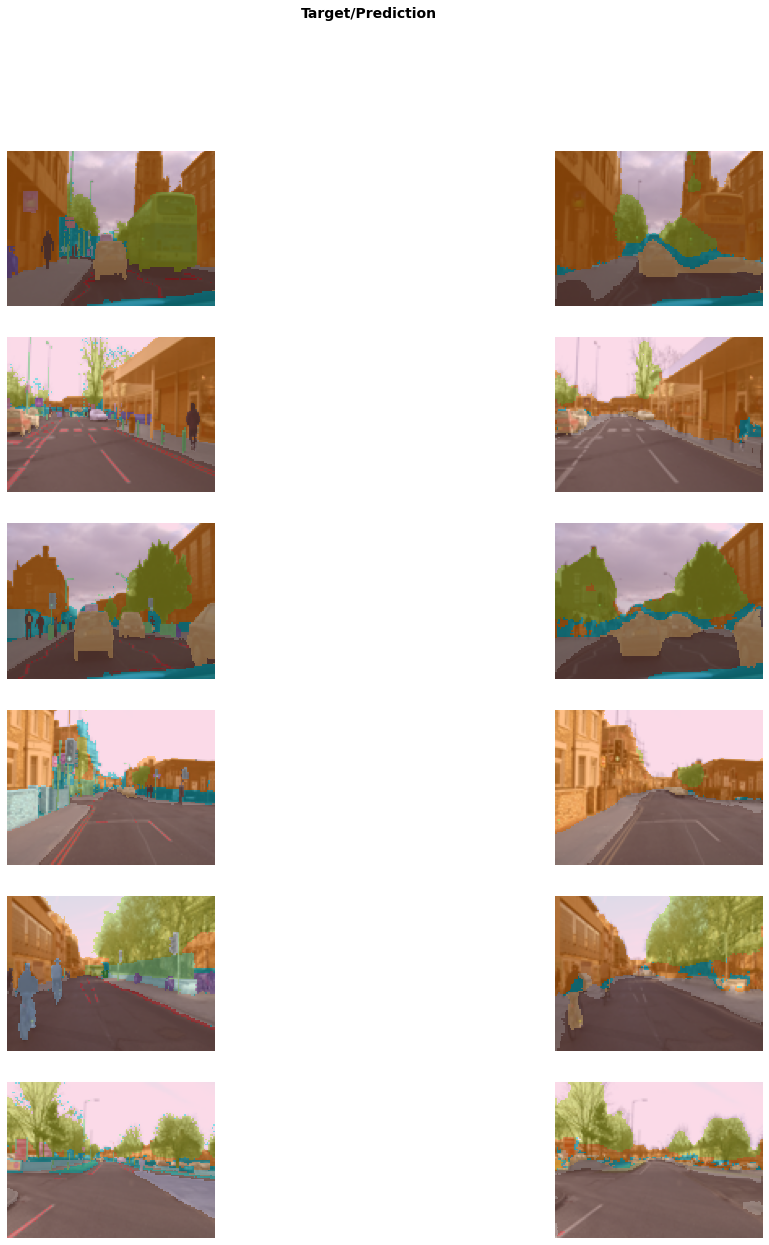

Image Segmentation

- training a model to recognize the content of every single pixel in an image

```python
path = untar_data(URLs.CAMVID_TINY)
print(path)
```

```text
/home/innom-dt/.fastai/data/camvid_tiny
```

```python
!ls $path
```

```text
codes.txt images labels
```

```python
# Basic wrapper around several `DataLoader`s with factory methods for segmentation problems
dls = SegmentationDataLoaders.from_label_func(
    path, bs=8, fnames = get_image_files(path/"images"),
    label_func = lambda o: path/'labels'/f'{o.stem}_P{o.suffix}',
    codes = np.loadtxt(path/'codes.txt', dtype=str), seed=42, num_workers=8
)
```

```python
img_files = get_image_files(path/"images")
print(len(img_files))
for i in range(5):
    print(img_files[i])
```

```text
100
/home/innom-dt/.fastai/data/camvid_tiny/images/0016E5_08155.png
/home/innom-dt/.fastai/data/camvid_tiny/images/Seq05VD_f03210.png
/home/innom-dt/.fastai/data/camvid_tiny/images/Seq05VD_f03060.png
/home/innom-dt/.fastai/data/camvid_tiny/images/Seq05VD_f03660.png
/home/innom-dt/.fastai/data/camvid_tiny/images/0016E5_05310.png
```

```python
path/'labels'/f'{img_files[0].stem}_P{img_files[0].suffix}'
```

```text
Path('/home/innom-dt/.fastai/data/camvid_tiny/labels/0016E5_08155_P.png')
```

```python
# Build a unet learner
learn = unet_learner(dls, resnet34, pretrained=True)
learn.fine_tune(8)
```

| epoch | train_loss | valid_loss | time |
|---|---|---|---|
| 0 | 2.914330 | 2.284680 | 00:01 |

| epoch | train_loss | valid_loss | time |
|---|---|---|---|
| 0 | 1.906939 | 1.415985 | 00:01 |
| 1 | 1.628720 | 1.185889 | 00:01 |
| 2 | 1.454888 | 1.024575 | 00:01 |
| 3 | 1.290813 | 0.921251 | 00:01 |
| 4 | 1.152427 | 0.809383 | 00:01 |
| 5 | 1.034114 | 0.793250 | 00:01 |
| 6 | 0.941492 | 0.782535 | 00:01 |
| 7 | 0.869773 | 0.778228 | 00:01 |

```python
learn.show_results(max_n=6, figsize=(18,20))
```
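Recognizing "the content of every single pixel" boils down to choosing a class for each pixel. In this NumPy sketch, random numbers stand in for a real model's output: a score per class at each pixel, laid out (classes, height, width) as a PyTorch segmentation model produces for one image. The predicted mask takes the highest-scoring class per pixel, and pixel accuracy compares it with a made-up target mask.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a model's output on one image: a score for each of 3 classes at
# every pixel, laid out as (classes, height, width)
n_classes, height, width = 3, 4, 5
logits = rng.normal(size=(n_classes, height, width))

# Each pixel is assigned the class with the highest score
pred_mask = logits.argmax(axis=0)                # shape (height, width), values 0..n_classes-1

# Made-up ground truth; pixel accuracy is the fraction of pixels labelled correctly
target_mask = rng.integers(0, n_classes, size=(height, width))
pixel_accuracy = float((pred_mask == target_mask).mean())
print(pred_mask)
print(f"pixel accuracy: {pixel_accuracy:.2f}")
```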

Natural Language Processing (NLP)

- generate text

- translate from one language to another

- analyze comments

- label words in sentences

```python
from fastai.text.all import *
```

IMDB Large Movie Review Dataset

```python
path = untar_data(URLs.IMDB)
path
```

```text
Path('/home/innom-dt/.fastai/data/imdb')
```

```python
!ls $path
```

```text
imdb.vocab README test tmp_clas tmp_lm train unsup
```

```python
# Basic wrapper around several `DataLoader`s with factory methods for NLP problems
dls = TextDataLoaders.from_folder(path, valid='test', bs=64, seed=42, num_workers=8)
```

```python
len(dls.items)
```

```text
25000
```

```python
dls.after_iter
```

```text
<bound method after_iter of <fastai.text.data.SortedDL object at 0x7f0f493cb9d0>>
```

```python
# A `DataLoader` that goes through the items in the order given by `sort_func`
SortedDL
```

```python
!ls $path/train
```

```text
labeledBow.feat neg pos unsupBow.feat
```

```python
!ls $path/train/pos | wc -l
```

```text
12500
```

```python
!ls $path/train/pos | head -5
```

```text
0_9.txt
10000_8.txt
10001_10.txt
10002_7.txt
10003_8.txt
```

```python
!cat $path/train/pos/0_9.txt
```

```text
Bromwell High is a cartoon comedy. It ran at the same time as some other programs about school life, such as "Teachers". My 35 years in the teaching profession lead me to believe that Bromwell High's satire is much closer to reality than is "Teachers". The scramble to survive financially, the insightful students who can see right through their pathetic teachers' pomp, the pettiness of the whole situation, all remind me of the schools I knew and their students. When I saw the episode in which a student repeatedly tried to burn down the school, I immediately recalled ......... at .......... High. A classic line: INSPECTOR: I'm here to sack one of your teachers. STUDENT: Welcome to Bromwell High. I expect that many adults of my age think that Bromwell High is far fetched. What a pity that it isn't!
```

```python
!ls $path/train/neg | wc -l
```

```text
12500
```

```python
!ls $path/train/neg | head -5
```

```text
0_3.txt
10000_4.txt
10001_4.txt
10002_1.txt
10003_1.txt
```

```python
!cat $path/train/neg/0_3.txt
```

```text
Story of a man who has unnatural feelings for a pig. Starts out with a opening scene that is a terrific example of absurd comedy. A formal orchestra audience is turned into an insane, violent mob by the crazy chantings of it's singers. Unfortunately it stays absurd the WHOLE time with no general narrative eventually making it just too off putting. Even those from the era should be turned off. The cryptic dialogue would make Shakespeare seem easy to a third grader. On a technical level it's better than you might think with some good cinematography by future great Vilmos Zsigmond. Future stars Sally Kirkland and Frederic Forrest can be seen briefly.
```

```python
# Create a `Learner` with a text classifier
learn = text_classifier_learner(dls, AWD_LSTM, drop_mult=0.5, metrics=accuracy)
learn.fine_tune(4, 1e-2)
```

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.460765 | 0.407599 | 0.815200 | 01:25 |

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.316035 | 0.262926 | 0.895640 | 02:46 |
| 1 | 0.250969 | 0.223144 | 0.908440 | 02:48 |
| 2 | 0.186867 | 0.187719 | 0.926720 | 02:48 |
| 3 | 0.146174 | 0.190528 | 0.927880 | 02:50 |

```python
learn.predict("I really liked that movie!")
```

```text
('pos', TensorText(1), TensorText([5.5877e-04, 9.9944e-01]))
```

```python
learn.predict("I really hated that movie!")
```

```text
('neg', TensorText(0), TensorText([0.9534, 0.0466]))
```

Tabular Data

- data that is in the form of a table

- spreadsheets

- databases

- Comma-separated Values (CSV) files

- model tries to predict the value of one column based on information in other columns

```python
from fastai.tabular.all import *
```

Adult Dataset

- from the paper Scaling Up the Accuracy of Naive-Bayes Classifiers: a Decision-Tree Hybrid

- contains some demographic data about individuals

```python
path = untar_data(URLs.ADULT_SAMPLE)
print(path)
```

```text
/home/innom-dt/.fastai/data/adult_sample
```

```python
!ls $path
```

```text
adult.csv export.pkl models
```

```python
import pandas as pd
```

```python
!cat $path/adult.csv | head -1
```

```text
age,workclass,fnlwgt,education,education-num,marital-status,occupation,relationship,race,sex,capital-gain,capital-loss,hours-per-week,native-country,salary
```

```python
pd.read_csv(f"{path}/adult.csv").head()
```

| | age | workclass | fnlwgt | education | education-num | marital-status | occupation | relationship | race | sex | capital-gain | capital-loss | hours-per-week | native-country | salary |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 49 | Private | 101320 | Assoc-acdm | 12.0 | Married-civ-spouse | NaN | Wife | White | Female | 0 | 1902 | 40 | United-States | >=50k |
| 1 | 44 | Private | 236746 | Masters | 14.0 | Divorced | Exec-managerial | Not-in-family | White | Male | 10520 | 0 | 45 | United-States | >=50k |
| 2 | 38 | Private | 96185 | HS-grad | NaN | Divorced | NaN | Unmarried | Black | Female | 0 | 0 | 32 | United-States | <50k |
| 3 | 38 | Self-emp-inc | 112847 | Prof-school | 15.0 | Married-civ-spouse | Prof-specialty | Husband | Asian-Pac-Islander | Male | 0 | 0 | 40 | United-States | >=50k |
| 4 | 42 | Self-emp-not-inc | 82297 | 7th-8th | NaN | Married-civ-spouse | Other-service | Wife | Black | Female | 0 | 0 | 50 | United-States | <50k |

```python
dls = TabularDataLoaders.from_csv(path/'adult.csv', path=path, y_names="salary",
    cat_names = ['workclass', 'education', 'marital-status', 'occupation',
                 'relationship', 'race'],
    cont_names = ['age', 'fnlwgt', 'education-num'],
    procs = [
        # Transform the categorical variables to something similar to `pd.Categorical`
        Categorify,
        # Fill the missing values in continuous columns
        FillMissing,
        # Normalize continuous columns to zero mean and unit standard deviation
        Normalize
    ], bs=64, seed=42, num_workers=8)
```

```python
learn = tabular_learner(dls, metrics=accuracy)
learn.fit_one_cycle(3)
```

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 0.383882 | 0.353906 | 0.839834 | 00:02 |
| 1 | 0.369853 | 0.343141 | 0.844134 | 00:02 |
| 2 | 0.353572 | 0.340899 | 0.844441 | 00:02 |
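A rough plain-Python sketch of what the three `procs` do, on a few hypothetical rows. This approximates the ideas, not fastai's implementation: fastai computes the statistics on the training set only and handles more cases, and the use of the median, a `#na#` code of 0, and the population standard deviation here are our assumptions.

```python
from statistics import mean, median, pstdev

rows = [  # a few hypothetical rows in the style of the Adult dataset
    {"workclass": "Private", "education-num": 12.0},
    {"workclass": "Self-emp-inc", "education-num": None},
    {"workclass": "Private", "education-num": 14.0},
    {"workclass": None, "education-num": 9.0},
]

# Categorify: replace each category with an integer code (0 is reserved for missing)
vocab = ["#na#"] + sorted({r["workclass"] for r in rows if r["workclass"] is not None})
for r in rows:
    r["workclass"] = vocab.index(r["workclass"]) if r["workclass"] is not None else 0

# FillMissing: fill gaps in a continuous column with its median and record where they were
fill = median(r["education-num"] for r in rows if r["education-num"] is not None)
for r in rows:
    r["education-num_na"] = r["education-num"] is None
    if r["education-num"] is None:
        r["education-num"] = fill

# Normalize: shift and scale the continuous column to mean 0 and standard deviation 1
values = [r["education-num"] for r in rows]
mu, sigma = mean(values), pstdev(values)
for r in rows:
    r["education-num"] = (r["education-num"] - mu) / sigma

for r in rows:
    print(r)
```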

Recommendation Systems

- model tries to predict the rating a user would give for something

```python
from fastai.collab import *
path = untar_data(URLs.ML_SAMPLE)
print(path)
```

```text
/home/innom-dt/.fastai/data/movie_lens_sample
```

```python
dls = CollabDataLoaders.from_csv(path/'ratings.csv', bs=64, seed=42, num_workers=8)
learn = collab_learner(dls, y_range=(0.5,5.5))
learn.fine_tune(10)
```

| epoch | train_loss | valid_loss | time |
|---|---|---|---|
| 0 | 1.503958 | 1.418632 | 00:00 |

| epoch | train_loss | valid_loss | time |
|---|---|---|---|
| 0 | 1.376203 | 1.359422 | 00:00 |
| 1 | 1.252138 | 1.180762 | 00:00 |
| 2 | 1.017709 | 0.880817 | 00:00 |
| 3 | 0.798113 | 0.741172 | 00:00 |
| 4 | 0.688681 | 0.708689 | 00:00 |
| 5 | 0.648084 | 0.697439 | 00:00 |
| 6 | 0.631074 | 0.693731 | 00:00 |
| 7 | 0.608035 | 0.691561 | 00:00 |
| 8 | 0.609987 | 0.691219 | 00:00 |
| 9 | 0.607285 | 0.691045 | 00:00 |

```python
learn.show_results()
```

| | userId | movieId | rating | rating_pred |
|---|---|---|---|---|
| 0 | 63.0 | 48.0 | 5.0 | 2.935433 |
| 1 | 70.0 | 36.0 | 4.0 | 4.040259 |
| 2 | 65.0 | 92.0 | 4.5 | 4.266208 |
| 3 | 47.0 | 98.0 | 5.0 | 4.345912 |
| 4 | 4.0 | 83.0 | 3.5 | 4.335626 |
| 5 | 4.0 | 38.0 | 4.5 | 4.221953 |
| 6 | 59.0 | 60.0 | 5.0 | 4.432968 |
| 7 | 86.0 | 82.0 | 4.0 | 3.797124 |
| 8 | 55.0 | 86.0 | 4.5 | 3.959364 |
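The core prediction of a simple dot-product recommender, roughly the kind of model `collab_learner` builds by default, can be sketched in plain Python: a dot product between a user's and a movie's latent factors, plus a per-user and a per-movie bias, squashed into `y_range` with a scaled sigmoid. The embedding values below are made up, and this is our simplified sketch rather than fastai's code. Because a sigmoid only approaches its limits, setting the upper end of the range a little past the top rating lets predictions actually reach it.

```python
import math


def sigmoid_range(x, low, high):
    """Squash any real number into the open interval (low, high)."""
    return low + (high - low) / (1 + math.exp(-x))


def predict_rating(user_factors, movie_factors, user_bias, movie_bias, y_range=(0.5, 5.5)):
    # Dot product of latent factors: how well the user's tastes match the movie
    score = sum(u * m for u, m in zip(user_factors, movie_factors)) + user_bias + movie_bias
    return sigmoid_range(score, *y_range)


# Hypothetical 3-factor embeddings for one user and one movie
user = [0.9, -0.2, 0.1]
movie = [1.1, 0.0, -0.3]
print(round(predict_rating(user, movie, user_bias=0.2, movie_bias=0.3), 3))
```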

Validation Sets and Test Sets

- changes we make during the training process are influenced by the validation score

- causes subsequent versions of the model to be indirectly shaped by the validation score

- test set

- a set of data that we, the model trainers, do not look at until we are finished training the model

- used to prevent us from overfitting our training process on the validation score

- cannot be used to improve the model

- especially useful when hiring a third party to train a model

- keep a test set hidden from the third party and use it to evaluate the final model

Use Judgment in Defining Test Sets

- the validation set and test set should be representative of the new data the model will encounter once deployed

- a validation set for time series data should be a contiguous section with the latest dates

- determine if your model will need to handle examples that are qualitatively different from the training set
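The difference between a random split and a time-based split can be sketched in plain Python on hypothetical daily records. The helper names and the 20% validation size are illustrative assumptions; the point is that the time-based split keeps the validation set as the latest contiguous block of dates, so the model is always validated on dates later than everything it was trained on.

```python
import random
from datetime import date, timedelta

# Hypothetical daily records covering 100 consecutive days
records = [(date(2021, 1, 1) + timedelta(days=i), i) for i in range(100)]


def random_split(items, valid_pct=0.2, seed=42):
    # Random split: validation rows are scattered across the whole time span
    shuffled = items[:]
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(items) * valid_pct)
    return shuffled[n_valid:], shuffled[:n_valid]


def time_split(items, valid_pct=0.2):
    # Time-based split: validation is the latest contiguous block of dates
    ordered = sorted(items)
    cut = len(ordered) - int(len(ordered) * valid_pct)
    return ordered[:cut], ordered[cut:]


r_train, r_valid = random_split(records)
train, valid = time_split(records)
print(max(d for d, _ in train) < min(d for d, _ in valid))      # True: no peeking at the future
print(max(d for d, _ in r_train) < min(d for d, _ in r_valid))  # False: training includes later dates
```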

Jargon

architecture: the functional form of a model

- people sometimes use model as a synonym for architecture

black box: something that gives predictions, but hides how it arrived at the prediction

- describing deep learning models as black boxes is a common misconception

classification model: attempts to predict a class or category

- does an image contain a cat or a dog

Convolutional Neural Network: a type of neural network that works particularly well for computer vision tasks

epoch

- one complete pass through the dataset

fine-tune

- only update parts of a pretrained model when training it on a new task

hyperparameters

- parameters about parameters

- higher-level choices that govern the meaning of the weight parameters

independent variable: the data not including the labels

loss

- a measure of performance that is used by the training system to update the model weights

- meant for machine consumption

- depends on the predictions and correct labels

metric

- a function that measures the quality of the model’s predictions using the validation set

- meant for human consumption
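The loss/metric distinction can be seen on a toy example. These plain-Python stand-ins for cross-entropy loss, accuracy, and error rate (fastai's versions work on tensors) compare two sets of predictions with identical accuracy but different losses: the metric cannot tell them apart, while the loss still rewards the more confident correct predictions, which is what gives training something to improve.

```python
import math


def cross_entropy(probs, targets):
    """Mean negative log-probability assigned to the correct class (a loss)."""
    return -sum(math.log(p[t]) for p, t in zip(probs, targets)) / len(targets)


def accuracy(probs, targets):
    """Fraction of predictions whose most likely class is correct (a metric)."""
    preds = [max(range(len(p)), key=p.__getitem__) for p in probs]
    return sum(pr == t for pr, t in zip(preds, targets)) / len(targets)


def error_rate(probs, targets):
    return 1 - accuracy(probs, targets)


targets = [1, 0, 1]
slightly_confident = [[0.4, 0.6], [0.6, 0.4], [0.45, 0.55]]
very_confident = [[0.1, 0.9], [0.9, 0.1], [0.2, 0.8]]

for name, probs in [("slightly confident", slightly_confident), ("very confident", very_confident)]:
    print(f"{name}: accuracy={accuracy(probs, targets)}, "
          f"loss={cross_entropy(probs, targets):.4f}")
```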

model: the combination of an architecture with a particular set of parameters

overfitting

- a large enough model can memorize the training set when allowed to train for long enough

- the single most important and challenging issue when training a model

predictions: output of the model

- predictions are calculated from the independent variable

pretrained model

- a model that has weights which have already been trained on another dataset

- should almost always use a pretrained model when available

- reduces training time for the target dataset

- reduces the amount of data needed for the new task

regression model: attempts to predict one or more numeric quantities

- weather

- location

test set

- a set of data that we, the model trainers, do not look at until we are finished training the model

- used to prevent us from overfitting our training process on the validation score

- cannot be used to improve the model

- especially useful when hiring a third party to train a model

validation set:

- used to measure the accuracy of the model during training

- helps determine if the model is overfitting

- important to measure model performance on data that is not used to update its weight values

- we want our model to perform well on previously unseen data, not just the examples it was trained on

transfer learning

- training a pretrained model on a new dataset and task

- removes the last layer of the pretrained model and replaces it with a new layer for the target dataset

- last layer of the model is referred to as the head

weights: often called parameters

References


I’m Christian Mills, a deep learning consultant specializing in practical AI implementations. I help clients leverage cutting-edge AI technologies to solve real-world problems.
